Warp speed deep dive: Sustaining 48x speed with 90% less spend

Our team was recently given a green light to decouple a critical component from our existing legacy system (the “ABM platform”). Our mission was to rebuild it as a brand-new service, codenamed the ‘Warp Speed Loader’ (WSL) service, running in its own dedicated AWS account.

This gave us a rare opportunity to start fresh. Our goals were simple but ambitious: decouple from the monolith, deploy faster, and build a foundation that would let us iterate at high speed. This post is a continuation of the architectural journey recently shared by my colleague, Josh Cason, in his post: Warp Speed Loading: 48x Faster Data at Demandbase.

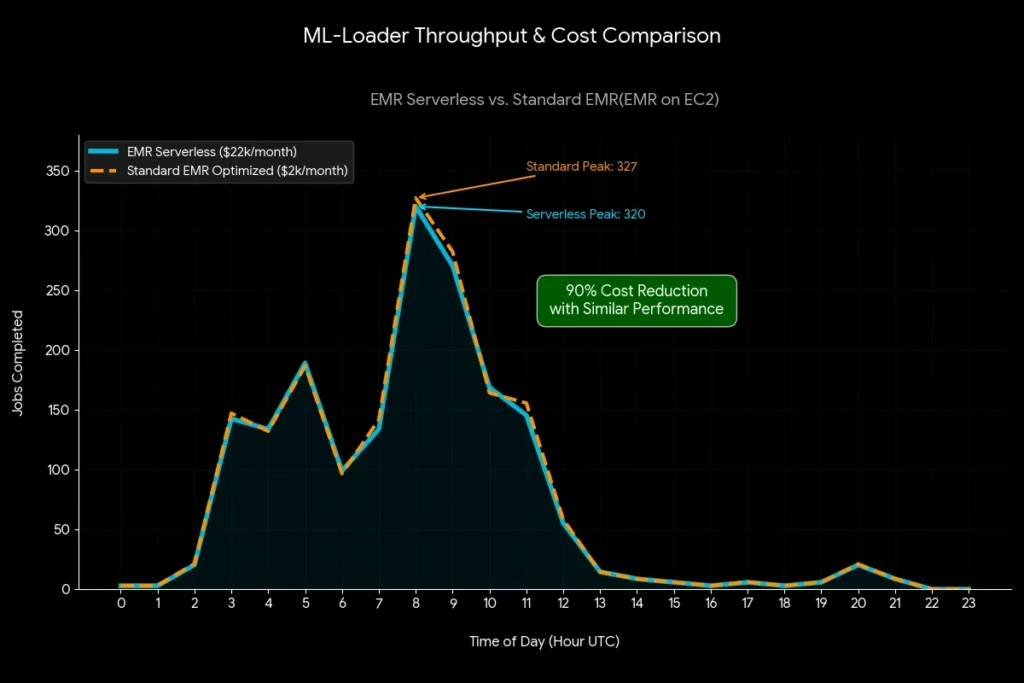

While Josh covered the 48x speed gains, this deep dive goes beyond the speed to explore the compute optimizations and engineering guardrails we built to sustain that performance. Here is how we navigated the “day two” operational realities to achieve a massive 90% reduction in our infrastructure costs.

Phase 1: Clean Decoupling with SQS

The first critical decision was how to decouple our new service loader from the legacy platform without re-inventing the wheel. We needed a simple, reliable way to send job metadata—not the massive tenant data itself, but the “what to run” instructions—from the old system to the new.

We quickly narrowed the field to SQS and Kafka.

After a few quick proof-of-concepts based on our model-scoring workloads, we chose SQS.

Here’s why:

- Fits the Bill -Simplicity & Multi-Tenant Processing: It just works and fits perfectly into our AWS-native ecosystem without the operational overhead of managing a Kafka cluster. This setup robustly supported our multi-tenant approach by allowing us to implement tenant grouping and 30-minute batch processing within the consumer logic using Standard SQS queues.

- Right-Sized for the Job: Our use case involves running ~1,500-2,000 model scores across all tenants. This metadata is critical but doesn’t require the high-throughput, streaming-log capabilities of Kafka. SQS was a perfect fit, and we avoided over-engineering our new WSL service.

We quickly created an SQS queue in our new AWS account and built producers on the legacy platform and consumers in our new infrastructure. This pattern gave us a clean decoupling point and allowed our team to iterate independently without constantly touching the legacy ABM code.

Phase 2: The MVP with EMR Serverless

With our data pipeline defined, we needed to build and run our Spark applications. Our initial design goal was speed—we wanted to get an end-to-end MVP (Minimum Viable Product) running as fast as possible.

This led us to EMR Serverless. It was the perfect tool for our V0, allowing us to build and deploy our Spark jobs immediately without investing time in provisioning, configuring, and managing standard EMR infrastructure.

The fully managed environment significantly boosted developer productivity. In addition, serverless improved Spark job execution time by removing cluster cold starts.

It helped us get our full pipeline validated and running in record time.

But as we moved toward full production workloads, we encountered specific operational challenges that prompted a strategic reassessment.

Phase 3: Transitioning for Production Scale and Cost Optimization

Once our MVP was validated, we began scaling our system with full production-level workloads. At this higher scale, we identified a few key bottlenecks that prompted us to transition to Standard EMR for our long-term solution. These points are not a critique of EMR Serverless, but rather an insight into how our specific requirements for massive scale and aggressive cost-saving principles led us to a different tool for this phase.

- Job Load Tuning Complexity: Successfully balancing performance and cost required a cautious and iterative approach. We had to carefully and iteratively tune controls at multiple levels, from application-level vCPU/RAM limits (including setting spark.dynamicAllocation.maxExecutors for each Spark job) to managing AWS account-level quotas to ensure heavy workloads didn’t stall in a PENDING state.

- API Control Plane Throttling: During peak periods, with hundreds of jobs being requested in a matter of minutes, high-frequency operations like StartJobRun and ListJobRuns frequently triggered ThrottlingException errors. The default rate limits for the EMR Serverless control plane (often as low as 1–10 transactions per second for certain actions) were immediately hit, necessitating both AWS quota increases and the implementation of client-side exponential backoff to maintain pipeline stability.

- Debugging Complexity: For our team, the fully managed nature of the serverless environment presented a hurdle in troubleshooting specific, complex issues. Without direct access to the underlying EC2 instances to verify configurations, troubleshooting was a black-box problem. For instance, tracking down a specific IAM role with a permissions issue became a time-consuming process, even leading to setting up calls with AWS support.

- Cost Structure: The Serverless Cost Mismatch: Ultimately, the cost profile proved to be the deal breaker. While the prior operational challenges could have potentially been managed, the serverless design did not support key cost-effective features, such as Spot Instances. This was critical because even after trying to tune costs with cheaper on-demand options (like Graviton instances), the lack of Spot Instance support meant we could not meet our aggressive cost-reduction strategy. Our first monthly bill confirmed that a strategic pivot was immediately required, as detailed below.

Analyzing the Production Cost Profile

The biggest challenge was the one that hit our budget. Our first monthly infra bill for production workloads was ~$22,000. The breakdown was alarming:

- EMR Serverless accounted for 60% of the cost, primarily because it doesn’t support Spot Instances.

- S3 costs accounted for another 30%, driven not just by raw storage but also by a high volume ofHeadObject andGetObject operations on our Iceberg tables.

This was unsustainable and forced us to pivot immediately, even before GA. We attacked the cost problem on three fronts, detailed below:

1. A Shift to Standard EMR (EMR on EC2)

First, we rebuilt our compute layer using Standard EMR from scratch. This gave us full control and allowed us to implement several key cost-saving principles:

- Using Spot Instances for the task fleet, with a timeout to automatically fall back to on-demand if Spot capacity was unavailable.

- Using On-Demand Reserved Instances for both the Master and Core fleets to lock in savings on our stable, core compute.

- Adding Autoscaling with an idle time-out, allowing the cluster to shrink when not in use.

- Separating EMR clusters based on job workloads, finding a balance between one giant cluster and the high cost of launching a new cluster for every single job. The answer to this question was achieved through intelligent batching.

2. Slashing S3 Storage and Operation Costs

Next, we tackled the 30% of our bill coming from S3. We added proper S3 lifecycle policies to automatically transition or expire old data. Crucially, we also added periodic maintenance on our Iceberg tables to clean and expire old snapshots. This not only reduced raw storage costs but also significantly cut down on the expensiveHeadObject andGetObject API operations.

3. Optimizing Spark with Intelligent Batching

Finally, we optimized the application layer itself. We adapted our design to batch tenant scores for 30 minutes and run them together in a single, larger Spark job, rather than running them on-demand. This change had two major benefits:

- It finished the jobs faster.

- It dramatically reduced infrastructure costs by avoiding the “spin-up/spin-down” churn of multiple, smaller runs.

We also optimized our Spark jobs where possible, ensuring they could process millions of accounts in under 30 minutes.

The results of this three-pronged attack—especially the usage of Spot Instances combined with Reserved Instances with smart autoscaling policies—were staggering. We were able to cut our total infrastructure costs from $22,000 to just $2,000 the following month, reducing our infra spend by 90%.

This shift from EMR Serverless to Standard EMR was purely driven by our need for aggressive cost control via Spot Instances. For a less cost-sensitive or smaller-scale MVP, EMR Serverless remains an excellent choice for speed-to-market.

Phase 4: The Orchestration Backbone: Temporal

The final pillar of our new architecture was workflow orchestration. Our legacy system used a basic Quartz scheduler, which wasn’t going to be powerful or reliable enough for our new service.

We made the strategic decision to adopt Temporal as our orchestration platform.

This was a massive leap forward. Temporal provides durable execution for our pipelines, guaranteeing that our complex, multi-step scoring jobs run to completion, even in the face of failures. It gives us:

- Automatic Retries: Handled out-of-the-box, removing complex error-handling logic from our application code.

- Effortless Scaling: It easily scales to handle thousands of concurrent workflows.

- Clear Monitoring: We get a “single pane of glass” view into the state of all our running pipelines.

Best of all, we were able to deploy our Temporal Workers alongside our SQS consumers in our V0, gaining this powerful orchestration layer with minimal initial cost. It has scaled beautifully and now serves as the resilient, reliable heart of our ‘Warp Speed Loader’ (WSL) service, managing all our workloads across different task queues.

Warp Speed Loader was never just about hitting 48x performance. It was about building a system that could sustain that speed in production—without letting costs spiral out of control.

By decoupling cleanly with SQS, validating quickly with EMR Serverless, pivoting to Standard EMR for Spot-driven savings, and adding durable orchestration with Temporal, we built a platform optimized for both scale and discipline.

Want to see how Warp Speed Loading can power your pipeline intelligence?

We have updated our Privacy Notice. Please click here for details.