3 Common Problems With Signal-Based Selling (And What To Do Instead)

First, what is signal-based selling (in theory)?

Signal-based selling is a go-to-market (GTM) strategy built on the idea that every buyer and every account leaves behind a trail of digital “signals” that reveal where they are in their buying journey.

The premise is that if GTM teams can capture and act on those signals in real time, they can optimize outreach, eliminate wasted effort, engage in-market accounts at the perfect moment, and drive revenue far more efficiently.

Why the theory sounds good (and, in a way, works)

Signal-based selling argues that no single data point is enough on its own. Instead, it relies on combining three core categories of signals into a single view:

- Fit signals: Who the account is.

  - These are firmographic and demographic details (industry, size, region, job title) designed to tell you whether the account fits your ideal customer profile (ICP).

- Intent signals: What the account is researching.

  - These come from online behavior such as reading articles, downloading reports, or searching competitor terms, showing signs of active interest in a category or solution.

- Engagement signals: How the account interacts with you.

  - These include website visits, webinar attendance, email opens, or direct conversations with sales, all pointing to the level of connection with your brand.

The idea here is that when these signals are combined and interpreted correctly, you get a complete view of potential customers at both account and contact levels.

Marketing can deliver a highly personalized ad the moment buyer intent is detected. Sales can follow up with tailored outreach when engagement spikes. Customer success can intervene with expansion offers at the exact time usage increases.

Here’s an example:

Let’s say a mid-sized software company in your ICP begins searching for “ABM platforms” across third-party sites (intent), a VP of Marketing from that account downloads a Demandbase whitepaper (engagement), and the company’s profile aligns with your target firmographics (fit).

In the signal-based selling model, this combination would trigger your GTM teams to act immediately. Marketing would accelerate account-based ads, while sales would reach out with a relevant case study, confident it’s the right time.
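To make the combination logic concrete, here is a minimal Python sketch of the example above, assuming each account’s signals have already been collected into one record. The `AccountSignals` class, its fields, and `should_activate` are hypothetical names for illustration, not a Demandbase API; the rule simply requires all three categories to line up before anyone acts.

```python
from dataclasses import dataclass, field


@dataclass
class AccountSignals:
    """Hypothetical snapshot of one account's signals across all three categories."""
    name: str
    fits_icp: bool                                         # fit: firmographics match your ICP
    intent_topics: set[str] = field(default_factory=set)   # intent: topics researched off-site
    engaged_titles: set[str] = field(default_factory=set)  # engagement: titles that interacted with you


def should_activate(acct: AccountSignals, topic: str, buyer_titles: set[str]) -> bool:
    """Act only when fit, intent, and engagement all line up for the same account."""
    has_fit = acct.fits_icp
    has_intent = topic in acct.intent_topics
    has_engagement = bool(acct.engaged_titles & buyer_titles)
    return has_fit and has_intent and has_engagement


acct = AccountSignals(
    name="Example Software Co",
    fits_icp=True,
    intent_topics={"abm platforms"},
    engaged_titles={"VP of Marketing"},
)
print(should_activate(acct, "abm platforms", {"VP of Marketing", "CMO"}))  # True
```

Dropping any one category (say, no ICP fit) makes the function return `False`, which is the point: no single signal triggers action on its own.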

But that’s not the reality for many GTM teams

On paper, there’s no logical reason this approach shouldn’t work. In practice, however, most GTM teams constantly struggle with the signals they capture.

For starters, signals are often fragmented across multiple platforms. Low data quality also creates false positives, sending reps after accounts that aren’t in-market.

In worse cases, GTM teams overcompensate for poor signals by capturing every signal they can, creating chaos for their sales reps.

This isn’t to say signal-based selling is bad. On the contrary, the idea is sound; it’s the common approach to it that’s broken.

Let’s look at three main problems putting teams at a disadvantage.

Related → Find the Right B2B Buyers with Accurate Intent Data

3 core problems with signal-based selling

Problem 1: Not all signals are equal

In theory, every signal should be a valuable clue pointing toward an account’s readiness to buy. But in practice, signals vary in quality, reliability, and relevance.

Treating all signals the same (e.g., giving a website visit the same weight as a surge in third-party intent data) is a big mistake.

The challenge lies in the signal-to-noise ratio. Buyers leave behind an overwhelming trail of digital footprints. They browse industry blogs, engage on social media, attend webinars, read whitepapers, click on ads, and sometimes casually visit a vendor’s website without any real buying intent.

Each of these activities generates a “signal.” However, not all of them actually mean the account is in-market or that the buying committee is moving closer to a decision.

For example, a student researching cybersecurity for a class assignment might download the same eBook as a CISO actively evaluating solutions. Both interactions register as engagement signals. Without context, a sales team could waste valuable time pursuing the student lead while overlooking the account with genuine purchase intent.

The same problem shows up with fit signals. Just because an account matches your ICP by size or industry doesn’t mean they are actively in-market. Too often, GTM teams focus on “fit” and not “readiness,” and end up wasting cycles on accounts with no real intent or engagement.

The consequence is a false sense of precision. The revenue team looks like it’s operating on ‘data-driven’ insights, but it’s still as confused as it was six months ago, just with more dashboards and notifications.

Related → Buyer Intent Explained: B2B Sales Signals That Convert

Problem 2: The source of signals

Where the signals actually come from matters just as much as the signals themselves. Yet many GTM teams rely on signals of questionable origin without validating their accuracy or completeness.

Let’s look at intent signals. Many are aggregated by third-party data providers that scrape web activity across a limited network of sites, and providers differ in how thoroughly they capture and categorize intent.

Some platforms infer buying interest from weak signals (e.g., reading a generic article online), which can inflate the number of “in-market” accounts.

Another challenge is data freshness. Some sources update in near real-time, while others may reflect activity from weeks ago. For sales and marketing teams trying to act at the right moment, outdated signals create a timing mismatch that frustrates both sellers and buyers.
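One way to account for freshness, assumed here rather than taken from any specific provider, is to discount each signal by its age. The sketch below uses exponential decay with a hypothetical seven-day half-life, so a day-old signal keeps about 90% of its weight while a four-week-old one keeps about 6%.

```python
from datetime import datetime, timedelta, timezone


def freshness_weight(observed_at: datetime, now: datetime, half_life_days: float = 7.0) -> float:
    """Exponential decay: a signal loses half its weight every `half_life_days` (assumed 7)."""
    age_days = (now - observed_at).total_seconds() / 86400
    if age_days < 0:
        raise ValueError("signal timestamp is in the future")
    return 0.5 ** (age_days / half_life_days)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
fresh = now - timedelta(days=1)
stale = now - timedelta(days=28)
print(round(freshness_weight(fresh, now), 3))  # 0.906
print(freshness_weight(stale, now))            # 0.0625
```

Multiplying a signal’s base weight by this factor lets a month-old surge count for far less than yesterday’s activity; the half-life itself is a tuning choice you would validate against your own sales cycle.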

Even within first-party data, source quality varies. A high-intent demo request carries far more predictive weight than a quick bounce from a blog page. But without differentiating these sources, GTM teams may treat both as equal “engagement signals.” And when they eventually reach out, buyers perceive their messages as irrelevant and spammy.

Related → Researching Accounts Using Signals

Problem 3: The “more is better” fallacy

This is partly the fault of GTM leaders who believe that the more signals they collect, the better their outcomes will be. The logic is “if one intent signal is useful, then ten must be even more valuable.”

What they’re missing is that signals don’t scale linearly in value, so adding more won’t necessarily improve precision or increase revenue.

Look at it like this: if you track an account across dozens of different interactions (website clicks, ad impressions, social engagements, webinar signups), the sheer volume can make it appear ‘highly active,’ even when none of those touches reflect real buying intent.

Vendors fuel this fallacy by boasting about scale. Many proudly advertise having “the world’s largest B2B database” with hundreds of millions of contacts. It sounds impressive, but for most B2B companies selling complex solutions, it’s irrelevant to their sales process.

If you’re targeting 5,000-10,000 accounts over the next few years, and each has a buying committee of 15 people, that’s roughly 75,000 to 150,000 contacts that matter. Your success hinges on the accuracy and depth of intelligence on those individuals.

Another issue is teams accepting low-quality signals just to increase coverage.

For example, a marketing leader might get excited about intent data spanning vast numbers of small businesses. But at that scale, high-fidelity intent is nearly impossible to capture, process, segment, analyze and route to workflows.

As Demandbase CEO Gabe Rogol puts it, what you’re actually buying is “a list of businesses that basically have a pulse.” [Read the post]

Those weak signals are then passed to sales, overwhelming reps and diluting sales efforts with unqualified accounts. This ends up undermining the very efficiency signal-based selling was supposed to deliver.

Read case study → From leads to accounts: How Thoughtworks transformed their sales strategy with Demandbase

Doing signal-based selling the right way

Define what matters for each GTM motion

The first step to fixing the “not all signals are equal” problem is to recognize that the importance of a signal isn’t universal. In fact, it depends on the specific GTM motion you’re running.

A signal that’s highly valuable for net-new acquisition might be irrelevant for expansion, and a signal that drives mid-market velocity deals could be meaningless in an enterprise context.

As Ankush Gupta, founder of Eventible.com, aptly put it:

“It’s not about finding the perfect signal. It’s about matching the right kind of insight to the job you’re trying to do.” [Read the post]

Yet many teams dump everything into one giant “active accounts” bucket and wonder why sales keeps missing the mark.

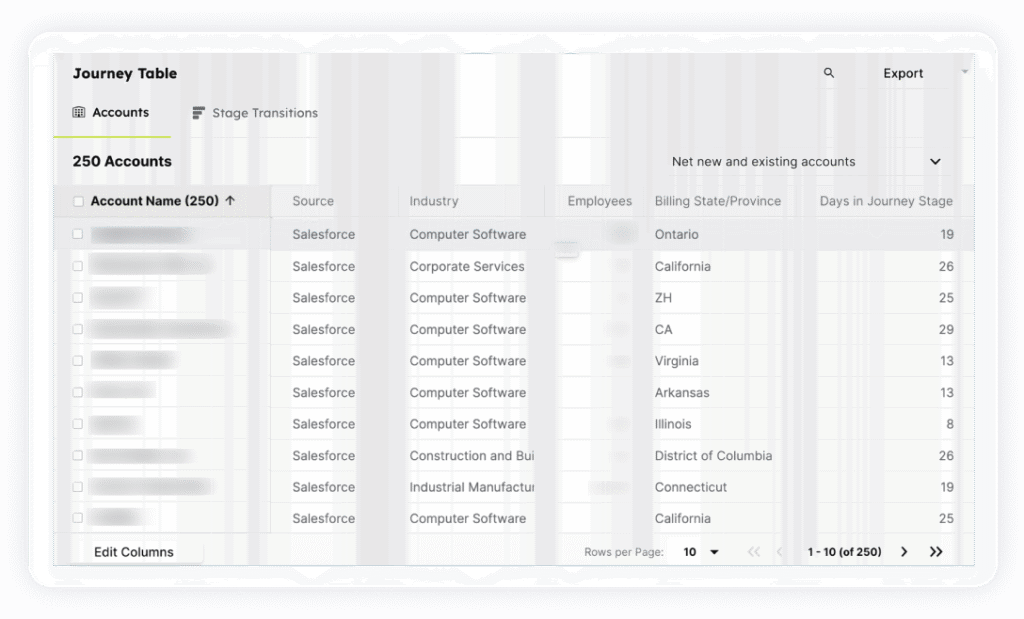

To break this cycle, you need to define what actually matters for each GTM motion. That means mapping the types of signals (fit, intent, and engagement) to the outcomes you’re trying to drive in each motion:

- For acquisition motions, prioritize fit and early intent signals.

  - For example, if your ICP is mid-market fintech companies, give heavy weight to a decision-maker from that segment who starts consuming third-party content on compliance automation, even if they haven’t touched your website yet.

- For retention and expansion, zero in on product usage and customer success signals.

  - Declining logins or spikes in support tickets might point to churn risk, while adoption of advanced features or requests for additional integrations can signal upsell opportunities.

- For pipeline acceleration, focus on late-stage engagement signals.

  - Pricing page visits, case study views, product demo requests, or multiple buying committee members returning to your site are far more valuable here than a single webinar attendance.
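The mapping above can be sketched in a few lines of Python, assuming signals arrive as simple records with a `type` field. The motion names and signal-type labels in `MOTION_PRIORITIES` are hypothetical placeholders drawn from the list; in practice you would tune them to your own data.

```python
# Hypothetical mapping: GTM motion -> signal types that motion should prioritize.
MOTION_PRIORITIES: dict[str, set[str]] = {
    "acquisition": {"icp_fit", "third_party_intent"},
    "retention_expansion": {"login_trend", "support_ticket_spike",
                            "advanced_feature_adoption", "integration_request"},
    "pipeline_acceleration": {"pricing_page_visit", "case_study_view",
                              "demo_request", "buying_committee_return"},
}


def relevant_signals(motion: str, signals: list[dict]) -> list[dict]:
    """Keep only the signals that matter for the given GTM motion."""
    wanted = MOTION_PRIORITIES[motion]
    return [s for s in signals if s["type"] in wanted]


acme_signals = [
    {"type": "webinar_attendance", "account": "acme"},
    {"type": "pricing_page_visit", "account": "acme"},
    {"type": "demo_request", "account": "acme"},
]
print([s["type"] for s in relevant_signals("pipeline_acceleration", acme_signals)])
# ['pricing_page_visit', 'demo_request']
```

Keeping the mapping as data rather than hard-coded logic means each motion’s owner can adjust it without touching the filtering code.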

DB Nuggets: Weight signals by impact

Assign scoring weights based on which signals historically correlate with pipeline movement.

For example, you might learn that three stakeholders visiting your pricing page is a stronger predictor of opportunity creation than any single content download.
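As a rough illustration, the sketch below derives weights from historical outcomes using lift: the opportunity-creation rate among accounts that showed a signal, divided by the overall rate. Lift is one simple choice assumed here, not a method the article prescribes, and the history records and signal names are hypothetical. Small samples make these weights noisy, so treat them as a starting point.

```python
from collections import defaultdict


def signal_lift(history: list[dict]) -> dict[str, float]:
    """Weight each signal by lift: P(opportunity | signal) / P(opportunity).

    Each history row is a hypothetical record:
    {"signals": set of signal names, "opportunity": bool}.
    """
    if not history:
        raise ValueError("history is empty")
    base_rate = sum(row["opportunity"] for row in history) / len(history)
    if base_rate == 0:
        raise ValueError("no opportunities in history; cannot compute lift")
    seen: dict[str, int] = defaultdict(int)
    converted: dict[str, int] = defaultdict(int)
    for row in history:
        for sig in row["signals"]:
            seen[sig] += 1
            converted[sig] += int(row["opportunity"])
    return {sig: (converted[sig] / seen[sig]) / base_rate for sig in seen}


def score(signals: set[str], weights: dict[str, float]) -> float:
    """Sum the learned weights; signals with no history contribute zero."""
    return sum(weights.get(sig, 0.0) for sig in signals)


history = [
    {"signals": {"pricing_page_3_stakeholders", "content_download"}, "opportunity": True},
    {"signals": {"pricing_page_3_stakeholders"}, "opportunity": True},
    {"signals": {"content_download"}, "opportunity": False},
    {"signals": {"content_download"}, "opportunity": False},
]
weights = signal_lift(history)
print({k: round(v, 2) for k, v in sorted(weights.items())})
# {'content_download': 0.67, 'pricing_page_3_stakeholders': 2.0}
```

A lift above 1 means the signal showed up more often in accounts that became opportunities than in the base population; below 1 means it is weaker than average.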

Related → How AI Automation Doubled Our SDR Opportunity Creation

Scrutinize and consolidate your data sources

As we discussed earlier, the origin of your signal matters. And when you pull in data from every third-party provider, intent network, and publisher you can find, you create a ‘data sprawl’ problem.

That’s why consolidation is important. By curating a smaller set of high-quality, validated sources, you create a cleaner, more reliable foundation for predictive insights.

And here’s how you can do it in three easy steps:

Audit every signal source you use

Start by listing all your current data providers and internal systems feeding buying signals into your GTM tech stack. Then evaluate three aspects:

- Accuracy: How often does this source’s data correlate with actual revenue outcomes in your CRM?

- Transparency: Do you know how this data is collected, refreshed, and scored? Or is it a ‘black box’?

- Relevance: Is the data aligned with your ICP and buying committee personas?
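For the accuracy check, one simple measure (an assumption, not a standard the article defines) is precision: of the accounts a source flagged as in-market, what share later became closed-won in your CRM? The source names and account sets below are hypothetical.

```python
def source_precision(flags: dict[str, set[str]], won_accounts: set[str]) -> dict[str, float]:
    """For each source: share of the accounts it flagged that later closed-won in the CRM."""
    return {
        source: len(flagged & won_accounts) / len(flagged) if flagged else 0.0
        for source, flagged in flags.items()
    }


# Hypothetical sources and account IDs, for illustration only.
flags = {
    "provider_a": {"acme", "globex", "initech", "umbrella"},
    "provider_b": {"acme", "hooli", "initech", "umbrella", "soylent"},
    "web_analytics": {"acme", "globex"},
}
won = {"acme", "globex"}
print(source_precision(flags, won))
# {'provider_a': 0.5, 'provider_b': 0.2, 'web_analytics': 1.0}
```

A low share doesn’t automatically disqualify a source, but it’s a prompt to ask the transparency and relevance questions above.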

Prioritize first-party data

Your own first-party data (website analytics, product usage, CRM activity, support logs) is often the most reliable because it comes directly from customer interactions with your brand.

It’s also highly specific to your ICP. First-party data should always be the foundation of your signal strategy.

For example, website pricing page visits or declining feature usage are much more predictive of real buying or churn behavior than generic “topic interest” signals purchased from a third-party source.

Be selective with third-party providers

Third-party intent data can be valuable, but it’s where most of the noise comes from. Instead of plugging in multiple providers indiscriminately, choose one or two that can:

- Show keyword-level transparency (“this account is researching X”)

- Refresh their data frequently (daily or in near real time)

- Align their publisher networks with your ICP (enterprise vs. SMB, technical vs. business audiences)

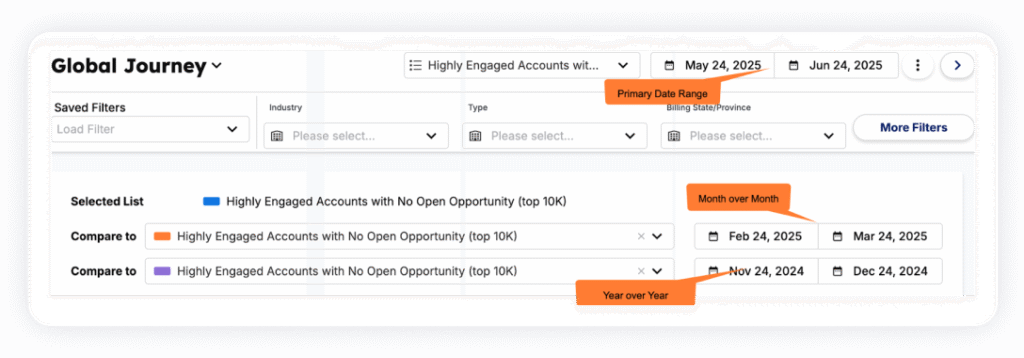

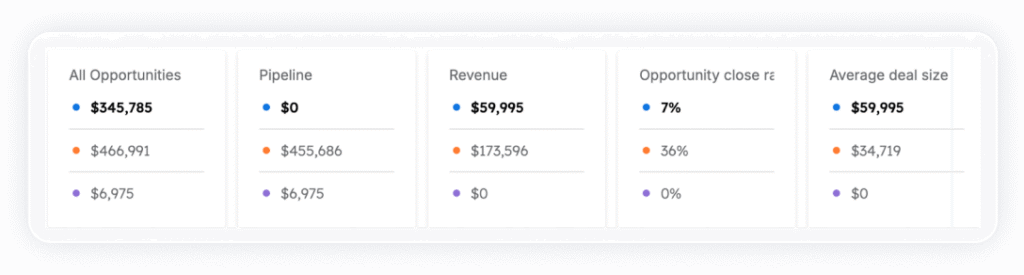

DB Nuggets: Centralize into a single source of truth

Feed all validated signal sources into a central GTM intelligence platform (e.g., Demandbase). This ensures marketing, sales, and success teams are all working from the same clean, consolidated data.

“The foundation of a modern strategy is bringing together 1st and 3rd [party data] in a way that’s clean, accessible, and utilized by all functions (including non-humans).”

Gabe Rogol, CEO, Demandbase.

Read case study → How Zuora transformed their go-to-market strategy with Demandbase

Related → Why Demandbase is the Leader in Intent and ABM Solutions?

Adopt a “depth over breadth” mindset

This means valuing the quality, consistency, and context of signals over sheer volume.

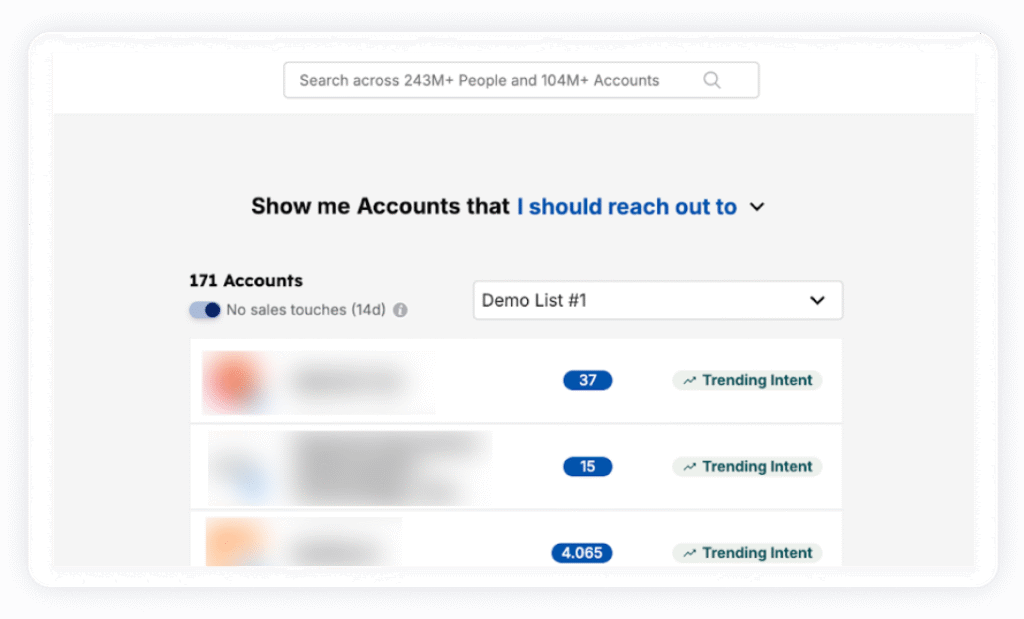

In practice, that means prioritizing accounts that show layered patterns of engagement: multiple personas involved, recurring visits to decision-stage content, or a spike in activity tied to a specific pain point.

For example, a single whitepaper download doesn’t mean much. But when that same account also shows surging intent on third-party networks and multiple visits to your pricing page, the combined depth creates a compelling picture of readiness.
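Here’s a minimal sketch of a depth score, assuming each account’s activity is available as a list of touches. It rewards the number of distinct personas, the variety of signal types, and repeat decision-stage visits rather than raw event count. The `Touch` fields, the `DECISION_STAGE` set, and the equal weighting of the three terms are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: which signal kinds count as decision-stage content.
DECISION_STAGE = {"pricing_page", "demo_request", "case_study"}


@dataclass
class Touch:
    """One hypothetical signal event for an account."""
    persona: Optional[str]  # None for account-level signals such as third-party intent
    kind: str               # e.g., "whitepaper", "third_party_intent", "pricing_page"


def depth_score(touches: list[Touch]) -> int:
    """Score layered patterns, not volume:
    distinct personas + distinct signal kinds + repeat decision-stage visits."""
    personas = {t.persona for t in touches if t.persona is not None}
    kinds = {t.kind for t in touches}
    decision_visits = sum(t.kind in DECISION_STAGE for t in touches)
    return len(personas) + len(kinds) + max(decision_visits - 1, 0)


single = [Touch("VP Marketing", "whitepaper")]
layered = [
    Touch("VP Marketing", "whitepaper"),
    Touch(None, "third_party_intent"),
    Touch("VP Marketing", "pricing_page"),
    Touch("Ops Manager", "pricing_page"),
]
noisy = [Touch(None, "ad_impression")] * 20

print(depth_score(single), depth_score(layered), depth_score(noisy))  # 2 6 1
```

The 20 ad impressions score 1, well below the four layered touches at 6, despite being five times the volume.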

This approach also protects GTM teams from ‘operational overload.’ Instead of drowning in millions of weak signals, they can work with a smaller, more reliable dataset that’s easier to normalize, score, and act on.

DB Nuggets: Focus campaigns on a shortlist of high-depth accounts

Rather than targeting hundreds of “active” accounts shallowly, concentrate budget and resources on the 10-20% showing the deepest signals. You should see higher conversion rates and less wasted spend.
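Selecting that shortlist is a ranking problem. The sketch below assumes you already have a numeric depth score per account and returns the top share of accounts; `top_percent` and the account names are hypothetical. Using an integer percentage keeps the cutoff arithmetic exact.

```python
import math


def shortlist(scores: dict[str, float], top_percent: int = 15) -> list[str]:
    """Return the highest-scoring `top_percent`% of accounts (always at least one)."""
    if not 0 < top_percent <= 100:
        raise ValueError("top_percent must be between 1 and 100")
    k = max(1, math.ceil(len(scores) * top_percent / 100))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]


# Hypothetical depth scores for 20 accounts.
scores = {f"account_{i:02d}": float(i) for i in range(20)}
print(shortlist(scores, 10))       # ['account_19', 'account_18']
print(len(shortlist(scores, 20)))  # 4
```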

Related → Elevating Your Sales Strategy with Demandbase One for Sales

Build a proprietary playbook to create an advantage

Signals on their own don’t create a competitive advantage because your competitors often have access to the same data.

What really makes the difference is how you interpret those signals and what you do with them.

That’s why GTM leaders need to build a proprietary playbook: a repeatable, documented system that turns raw signals into revenue-driving actions unique to your business.

With this, your GTM team knows:

- Which signals matter most for acquisition, acceleration, and expansion.

- How to act when a signal fires: whether that means a tailored outreach, a targeted campaign, or a proactive retention play.

- What success looks like, including key metrics and feedback loops to refine the process over time.

For example, your acquisition playbook might dictate:

- Tier 1 signals: A VP-level buyer from a target account visits your pricing page three times in a week.

  - Action: SDR sends a personalized outbound sequence within 24 hours, referencing pricing and positioning your value against competitors.

- Tier 2 signals: A mid-level manager from the same account downloads an industry trends report.

  - Action: Marketing nurtures with targeted content until higher-intensity engagement appears.
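The example acquisition playbook above can be expressed as simple rules. This is a sketch under stated assumptions: events for a single target account arrive as records with a person, a seniority label, a signal kind, and a timestamp, and the labels `"VP"`, `"Manager"`, `"pricing_page"`, and `"trends_report"` are hypothetical. Tier 1 is checked before Tier 2 so the stronger signal wins.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    """Hypothetical signal event from one target account."""
    person: str
    seniority: str  # e.g., "VP", "Manager"
    kind: str       # e.g., "pricing_page", "trends_report"
    at: datetime


def route(events: list[Event], now: datetime) -> str:
    """Apply the example acquisition playbook; Tier 1 is checked first."""
    window = timedelta(days=7)
    # Tier 1: the same VP-level buyer hits the pricing page 3+ times within 7 days.
    vp_pricing_visits = Counter(
        e.person for e in events
        if e.seniority == "VP" and e.kind == "pricing_page" and now - e.at <= window
    )
    if any(count >= 3 for count in vp_pricing_visits.values()):
        return "tier1: SDR personalized sequence within 24 hours"
    # Tier 2: a mid-level manager downloads an industry trends report.
    if any(e.seniority == "Manager" and e.kind == "trends_report" for e in events):
        return "tier2: marketing nurture"
    return "no action"


now = datetime(2024, 6, 7, 12, 0)
vp_visits = [Event("Dana", "VP", "pricing_page", now - timedelta(days=d)) for d in (1, 3, 5)]
manager_download = [Event("Sam", "Manager", "trends_report", now - timedelta(days=2))]

print(route(vp_visits, now))         # tier1: SDR personalized sequence within 24 hours
print(route(manager_download, now))  # tier2: marketing nurture
```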

Your expansion playbook, on the other hand, could focus on signals like increased feature adoption, multiple new seats activated, or executive-level engagement in QBRs. Each of these would trigger specific upsell or cross-sell plays.

How to build a proprietary playbook

- Audit past wins and losses: Look back at closed-won and closed-lost deals. What patterns of signals consistently appeared in successful deals? What signals looked promising but went nowhere? These insights form the foundation of your rules of engagement.

- Map signals to stages of the buyer journey: Not all signals should trigger the same response.

  - Top-of-funnel: Content downloads, light research. → Nurture campaigns.

  - Mid-funnel: Pricing page visits, multiple stakeholders engaging. → SDR outreach.

  - Bottom-of-funnel: Direct competitor comparisons, proposal downloads. → AE engagement with tailored offer.

- Turn these plays into standard operating procedures (SOPs): Document what happens when each signal (or set of signals) appears.

  - Example: If three decision-makers from an ICP account attend a webinar and one downloads a case study, the SDR team executes Play #17: personalized LinkedIn outreach sequence, reinforced with an ABM ad campaign.

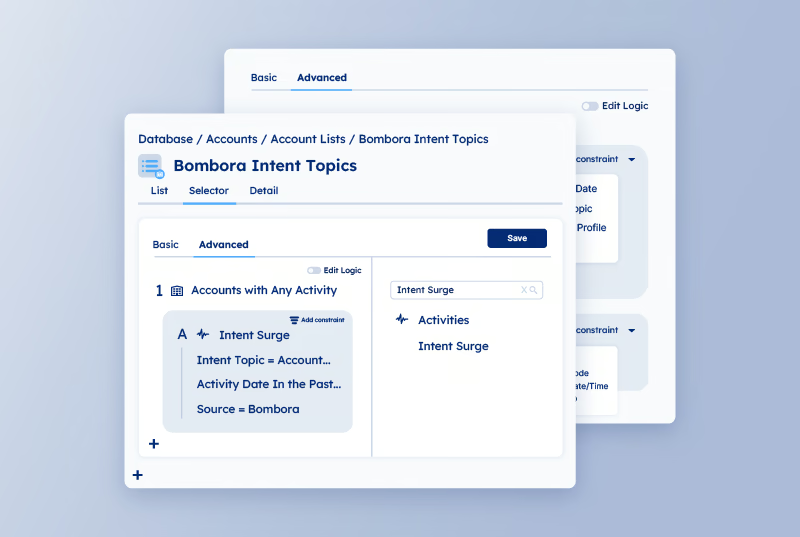

- Embed the playbook in tools: Integrate the playbook into your CRM, intent platform, or orchestration tool so the right play is surfaced automatically when the signal is detected.
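To show what embedding these plays in tools might look like, here is a hypothetical sketch that encodes the funnel-stage map and the Play #17 example as rules. The signal labels, `STAGE_ACTIONS`, and function names are illustrative assumptions, not the schema of any CRM or orchestration product.

```python
# Hypothetical signal labels mapped to (funnel stage, default action), per the list above.
STAGE_ACTIONS = {
    "content_download": ("top", "nurture campaign"),
    "light_research": ("top", "nurture campaign"),
    "pricing_page": ("mid", "SDR outreach"),
    "multi_stakeholder_engagement": ("mid", "SDR outreach"),
    "competitor_comparison": ("bottom", "AE engagement with tailored offer"),
    "proposal_download": ("bottom", "AE engagement with tailored offer"),
}
STAGE_RANK = {"top": 0, "mid": 1, "bottom": 2}


def matches_play_17(in_icp: bool, webinar_decision_makers: int, case_study_downloads: int) -> bool:
    """SOP example: 3+ decision-makers from an ICP account attend a webinar
    and at least one downloads a case study."""
    return in_icp and webinar_decision_makers >= 3 and case_study_downloads >= 1


def next_action(signal_kinds: set[str]) -> str:
    """Return the default action for the furthest-along funnel stage observed."""
    known = [STAGE_ACTIONS[k] for k in signal_kinds if k in STAGE_ACTIONS]
    if not known:
        return "no action"
    _stage, action = max(known, key=lambda pair: STAGE_RANK[pair[0]])
    return action


print(next_action({"content_download", "pricing_page"}))  # SDR outreach
print(matches_play_17(True, 3, 1))  # True -> run Play #17 (LinkedIn sequence + ABM ads)
```

Choosing the furthest-along stage means a mid-funnel signal overrides a top-of-funnel one on the same account, so the account isn’t stuck in nurture once it shows stronger intent.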

Build and execute your playbook with Demandbase

Most platforms will flood you with signals and leave the execution up to you. Demandbase goes further by enabling you to operationalize your playbooks directly inside the platform.


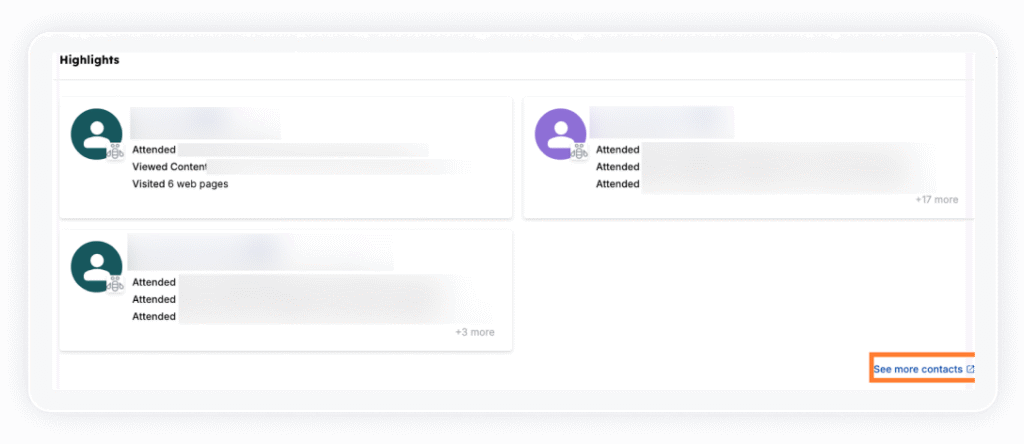

For example, when multiple stakeholders from a target account show late-stage intent, Demandbase can automatically:

- Trigger an ABM ad campaign,

- Alert the assigned sales rep with context-rich insights, and

- Feed signals to success teams so they can prepare supporting materials.

According to Jonathan Roberts, Account Executive at Fivetran:


“With Demandbase, I get real-time updates and triggers, highlighting exactly what my prospective customers are interested in, allowing me to frame each and every interaction with them based on their desired outcomes. It’s like having a crystal ball into their goals without me having to pry it out of them… truly life-changing!”

The right signals and the right accounts. Only with Demandbase.

Demandbase redefines signal-based selling by helping you identify who’s in your market and ready to buy. It ensures that every ad, every campaign, and every outreach effort is aligned to drive measurable revenue impact.

And we don’t just say this lightly. For example, one of our customers, League, used Demandbase to increase their meeting bookings by 41%.

Speaking about his experience with Demandbase, Jared Levy, Growth Marketing Manager at League, said:

“With Demandbase, we effectively transformed advertising spend into qualified opportunities. Through precision targeting and actionable insights, we’ve strengthened cross-functional alignment, accelerated pipeline growth, and delivered measurable impact in the areas that matter most.”

But that’s not all. There’s even more for you to gain by using Demandbase:

- Confidence in your targeting: You don’t have to guess which accounts to go after. Demandbase pinpoints the right buyers at the right stage, so your marketing and sales motions always hit where they’re most likely to convert.

- Alignment between teams: One of the biggest roadblocks in B2B is the gap between sales and marketing. Demandbase bridges that gap by giving both sides a single, trusted view of the accounts that matter. This alignment means fewer handoff issues and more momentum in closing deals.

- Smarter use of your budget: Marketing budgets are always under pressure. With Demandbase, every dollar goes further because you’re focusing on accounts that are interested. This means higher ROI and less wasted spend.

- Better customer experiences: Instead of bombarding prospects with generic outreach, Demandbase helps you deliver personalized, relevant messages that match where buyers are in their journey.

- Predictable growth: Perhaps the biggest benefit of all is predictability. With Demandbase guiding your focus, your pipeline becomes more strategic. You can forecast with greater accuracy and build growth that’s sustainable.

But don’t just take it from us. As Jamie Flores, Director of CRM at Baker Tilly, put it:

“Demandbase gave us the confidence to build industry dashboards that leaders use daily. We simply could not have done that without trustworthy data flowing in.”
